Microsoft’s latest AI chip goes head-to-head with Amazon and Google

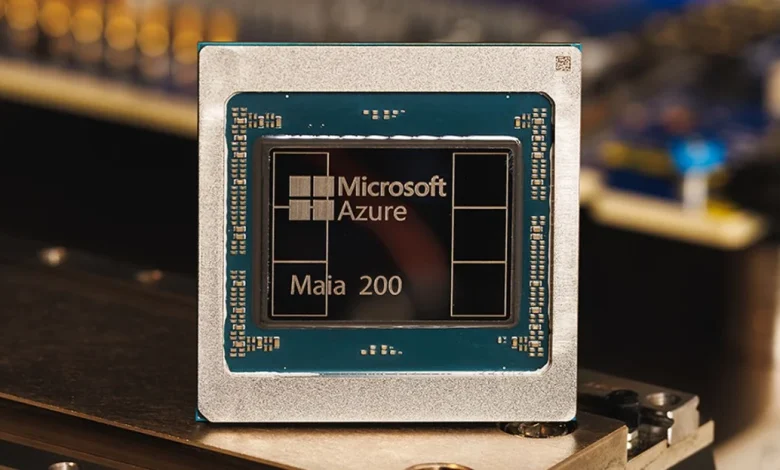

Microsoft is announcing the Maia 200 today, the successor to its first in-house AI chip. Built on TSMC’s 3nm process, the Maia 200 AI accelerator “delivers 3 times the FP4 performance of the third generation Amazon Trainium, and FP8 performance above Google’s seventh generation TPU,” according to Microsoft.

Each Maia 200 chip packs more than 100 billion transistors and is designed to handle large-scale AI workloads. “Maia 200 can effortlessly run today’s largest models, with plenty of headroom for even bigger models in the future,” says Scott Guthrie, executive vice president of Microsoft’s Cloud and AI division.

Microsoft will use Maia 200 to host OpenAI’s GPT-5.2 model and others for Microsoft Foundry and Microsoft 365 Copilot. “Maia 200 is also the most efficient inference system Microsoft has ever deployed, with 30 percent better performance per dollar than the latest generation hardware in our fleet today,” says Guthrie.

Microsoft’s performance flex over its close Big Tech competitors marks a change from 2023, when it launched the Maia 100 and didn’t want to be drawn into direct comparisons with Amazon’s and Google’s AI cloud capabilities. Both Google and Amazon are working on next-generation AI chips, though. Amazon is even working with Nvidia to integrate its upcoming Trainium4 chip with NVLink 6 and Nvidia’s MGX rack architecture.

Microsoft’s Superintelligence team will be the first to use Maia 200 chips, and the company is also inviting academics, developers, AI labs, and contributors to open-source model projects to an early preview of the Maia 200 software development kit. Microsoft is starting to deploy the new chips today in its Azure US Central data center region, with additional regions to follow.