Introducing Rho-alpha, the new robotics model from Microsoft

For decades, robots have excelled in structured settings like assembly lines, where tasks are predictable and tightly scripted.

“The emergence of vision-language-action (VLA) models for physical systems is enabling systems to perceive, reason, and act with increasing autonomy alongside humans in environments that are far less structured.”

– Ashley Llorens, Corporate Vice President and Managing Director, Microsoft Research Accelerator

Physical AI, where agentic AI meets physical systems, is poised to redefine robotics in the same way that generative models have transformed language and vision processing.

Today, we are announcing Rho-alpha (ρα), our first robotics model derived from Microsoft’s Phi series of vision-language models.

We invite organizations interested in evaluating Rho-alpha for their robots and use cases to express interest in the Rho-alpha Research Early Access Program. Rho-alpha will also be made available via Microsoft Foundry at a later date.

Rho-alpha translates natural language commands into control signals for robotic systems performing bimanual manipulation tasks. It can be described as a VLA+ model because it expands the set of perceptual and learning modalities beyond those typically used by VLAs. For perception, Rho-alpha adds tactile sensing, and work is underway to accommodate additional modalities such as force. For learning, we are working toward enabling Rho-alpha to continually improve during deployment by learning from feedback provided by people.

Through these advancements, we aim to make physical systems more easily adaptable, viewing adaptability as a hallmark of intelligence. We believe robots that can adapt more easily to dynamic situations and to human preferences will be more useful in the environments where we live and work, and more trusted by the people who deploy and operate them.

Prompt: “Push the green button with the right gripper”

Prompt: “Pull out the red wire”

Prompt: “Flip the top switch on”

Prompt: “Turn the knob to position 5”

Prompt: “Rotate the BusyBox clockwise”

Prompt: “Move the top slider to position 2”

The footage above shows Rho-alpha, cued by natural language instructions, interacting with the BusyBox, a physical interaction benchmark recently introduced by Microsoft Research. (The videos show the robot operating at real-time speed.)

Our team is working toward end-to-end optimizations of Rho-alpha’s training pipeline and training data corpus for performance and efficiency on bimanual manipulation tasks of interest to Microsoft and our partners. The model is currently under evaluation on dual-arm setups and humanoid robots. We will publish a technical description in the coming months.

Rho-alpha achieves tactile-aware behaviors infused with vision-language understanding through a process of co-training on trajectories from physical demonstrations and simulated tasks, together with web-scale visual question answering data. We plan to use the same blueprint to continue extending the model to additional sensing modalities across a variety of real-world tasks.
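The post does not say how the three data sources are combined during co-training. As a rough illustration only, one common way to co-train on heterogeneous sources is to draw every training batch from a weighted mixture of them. The sketch below assumes hypothetical source names, mixing weights, and supervision targets (`MIX`, `TARGET`, `sample_batch_sources`); it is not Rho-alpha's training code.

```python
import random
from collections import Counter

# Hypothetical source names and mixing weights, for illustration only.
# The actual proportions used to train Rho-alpha are not stated in the post.
MIX = {
    "physical_demos": 0.4,    # trajectories from physical demonstrations
    "sim_trajectories": 0.4,  # trajectories from simulated tasks
    "web_vqa": 0.2,           # web-scale visual question answering data
}

# Assumed supervision target per source: robot trajectories supervise
# actions, while VQA examples supervise text answers.
TARGET = {
    "physical_demos": "actions",
    "sim_trajectories": "actions",
    "web_vqa": "text",
}


def sample_batch_sources(batch_size, weights, rng):
    """Pick the source of each example in one batch, in proportion to weights."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=batch_size)


rng = random.Random(0)
batch = sample_batch_sources(256, MIX, rng)
print(Counter(batch))                     # examples drawn from each source
print(Counter(TARGET[s] for s in batch))  # examples per supervision target
```

Sampling by source, rather than simply concatenating the datasets, keeps smaller sources such as tactile demonstrations from being swamped by web-scale data; in practice the weights would be tuned for the tasks of interest.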

“While generating training data by teleoperating robotic systems has become a standard practice, there are many settings where teleoperation is impractical or impossible. We are working with Microsoft Research to enrich pre-training datasets collected from physical robots with diverse synthetic demonstrations using a combination of simulation and reinforcement learning.”

– Abhishek Gupta, Assistant Professor, University of Washington

Simulation plays a key role in our approach to overcoming the general lack of pretraining-scale robotics data, especially data containing tactile feedback and other less common sensing modalities. Our training pipeline generates synthetic data via a multistage process based on reinforcement learning using the open NVIDIA Isaac Sim framework. We combine these simulated trajectories with commercial and openly available physical demonstration datasets.

“Training foundation models that can reason and act requires overcoming the scarcity of diverse, real-world data. By leveraging NVIDIA Isaac Sim on Azure to generate physically accurate synthetic datasets, Microsoft Research is accelerating the development of versatile models like Rho-alpha that can master complex manipulation tasks.”

– Deepu Talla, Vice President of Robotics and Edge AI, NVIDIA

While extending perception capabilities can enable Rho-alpha to adjust a robot’s course of action during operation, robots can still make mistakes that are hard for them to recover from. Human operators can set a robot back on track using intuitive teleoperation devices such as a 3D mouse. We are focused on tooling and model adaptation techniques to enable Rho-alpha to learn from corrective feedback during system operation.

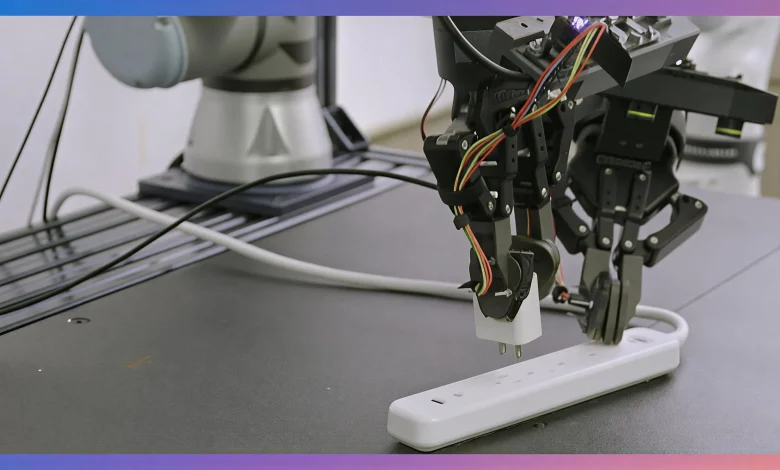

Prompt: “Pick up the power plug and insert it into the bottom socket of the square surge protector”

Prompt: “Place the tray into the toolbox and close the toolbox”

Prompt: “Take the tray out of the toolbox and put it on the table”

The videos above show a dual-UR5e-arm setup equipped with tactile sensors and controlled by Rho-alpha, performing plug insertion and toolbox packing. In the plug insertion episode, the right arm has difficulty inserting the plug into the outlet and is helped by real-time human guidance. (The videos show the robot operating at real-time speed.)

Robotics manufacturers, integrators, and end users have unique insights into the use cases and scenarios where emerging physical AI technologies offer transformative potential. To empower these stakeholders, we are working toward foundational technologies like Rho-alpha, along with associated tooling, that will enable them to train, deploy, and continuously adapt their own cloud-hosted physical AI using their own data for their own robots and scenarios.

If you’re interested in experimenting with and helping shape the future of our Physical AI foundations and tools, express your interest in our Research Early Access Program.